
Meta is adding more parental controls for teen AI use as part of its ongoing effort to make its platforms safer for younger users. After years of aggressively pushing AI chatbots across Instagram, Facebook, and Messenger, the company is finally giving parents new tools to monitor and limit how their teens interact with these AI characters.

The move comes as Meta faces increasing scrutiny over its AI chatbots' conversations with minors, which have raised concerns about inappropriate and romanticized interactions. Amid growing public pressure and regulatory attention, the update signals a more cautious approach to AI use among teens.

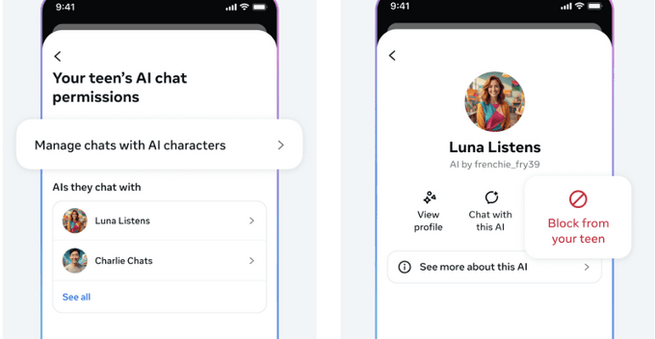

According to Meta’s latest announcement, parents will soon be able to block their teens from speaking with AI chatbots entirely or restrict access to specific AI characters. The new feature will roll out on Instagram early next year, Instagram head Adam Mosseri and Meta Chief AI Officer Alexandr Wang revealed in a joint blog post.

However, Meta’s built-in AI assistant will remain accessible. The company says it will continue to “offer helpful information and educational opportunities,” promising to include age-appropriate protections to ensure safer interactions.

Meta will also give parents what it calls "insight" into how their teens are using AI. The company hasn't detailed exactly what this feature will look like, but early descriptions suggest parents will see a high-level summary of the topics their teens discuss with AI chatbots.

Meta says the goal is to help parents start thoughtful conversations with their kids about technology, digital safety, and AI, rather than simply imposing blanket restrictions.

Mosseri and Wang expressed optimism that these updates will "bring parents some peace of mind" as their teens explore AI tools. Parents will need to be patient, though: the controls won't arrive until early next year, and at first they will be available only to Instagram users in the US, UK, Canada, and Australia.

Meta plans to expand the parental controls to other apps like Messenger and Facebook in future updates, promising to “share more soon.”

This update marks another step in Meta’s broader attempt to rebuild trust after facing backlash for its handling of teen safety and privacy. The company has previously been accused of prioritizing engagement over well-being, and the addition of AI parental controls could be seen as a response to both public criticism and upcoming regulatory frameworks around child safety online.

By giving parents more authority over how their teens use AI, Meta is signaling a shift toward transparency and responsible innovation, a move likely designed to reassure both users and regulators that the company is serious about protecting young people in the age of artificial intelligence.
