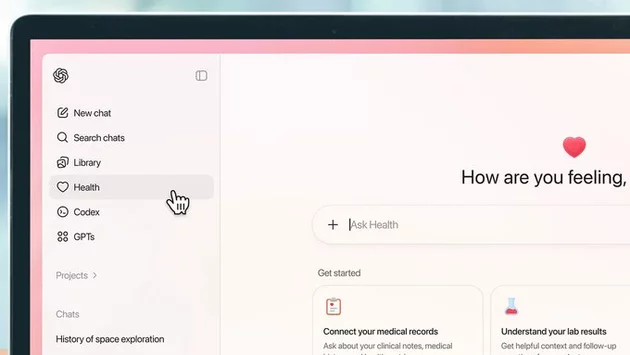

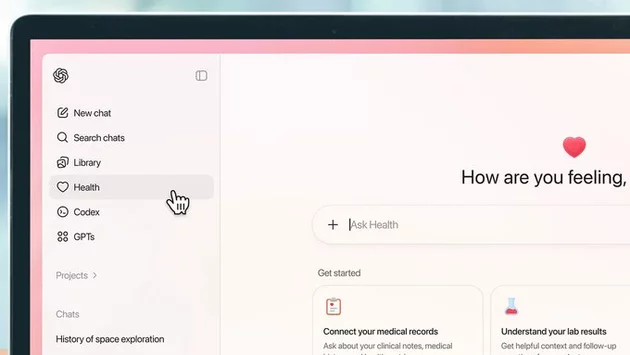

OpenAI recently unveiled ChatGPT Health, a feature designed to bring AI directly into your medical routine. The platform promises a virtual clinic experience, processing electronic medical records (EMRs) and integrating fitness and health app data. Users can expect tailored advice on lab results, diet, exercise, and doctor visit preparation. With ChatGPT reportedly fielding more than 230 million health-related queries each week, a dedicated AI health assistant seemed inevitable. However, the question many are asking is simple: can AI truly be trusted with sensitive medical information?

For many, the allure of ChatGPT Health lies in convenience. Instant access to personalized health insights could revolutionize how we manage our wellness. Yet the same speed and accessibility raise concerns. Even OpenAI warns users about potential inaccuracies. While AI can summarize and interpret data efficiently, it still struggles with hallucinations—instances where it confidently provides incorrect information. Relying on it for anything beyond basic guidance can be risky, leaving users to weigh convenience against accuracy.

The promise of enhanced security and privacy has not quelled all doubts. Uploading personal health data to an AI platform requires unprecedented trust. Medical records, fitness data, and sensitive lab results are among the most private types of information a person has. Even small lapses in data handling could have significant consequences. For cautious users, these privacy questions outweigh the potential benefits of AI-assisted medical guidance.

Despite these concerns, ChatGPT Health offers impressive functionality. The AI can contextualize lab results, provide dietary suggestions, and outline preparation steps for medical appointments. Integration with fitness trackers and health apps adds a personalized layer rarely seen in digital health tools. Yet the underlying AI limitations mean that recommendations should not replace professional medical advice. Users must remember that this is an assistant—not a licensed healthcare provider.

Trust remains the biggest barrier to adoption. OpenAI's reputation for innovation is strong, but users naturally question whether AI can handle the responsibility of interpreting sensitive health data. Stories of AI hallucinations, coupled with high-profile privacy breaches across the tech industry, have made caution the default stance. Many potential users are waiting for clear evidence that ChatGPT Health can operate securely and reliably before integrating it into their routines.

The launch of ChatGPT Health marks a significant milestone in AI-driven healthcare. It represents the intersection of convenience, personalization, and digital health innovation. However, the balance between usefulness and security is delicate. Users must weigh the potential for improved health insights against the risks of data exposure and inaccurate recommendations. Until trust is established, skepticism is likely to guide adoption.

As AI continues to evolve, tools like ChatGPT Health may redefine medical engagement. Continuous improvements in data privacy, accuracy, and transparency will determine whether users embrace or avoid these platforms. For now, the promise is tantalizing, but cautious optimism remains the prevailing sentiment. The success of AI in healthcare will depend not just on technology, but on the confidence it inspires in those who rely on it most.
