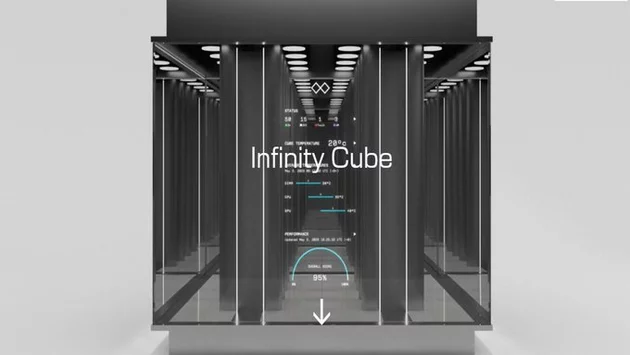

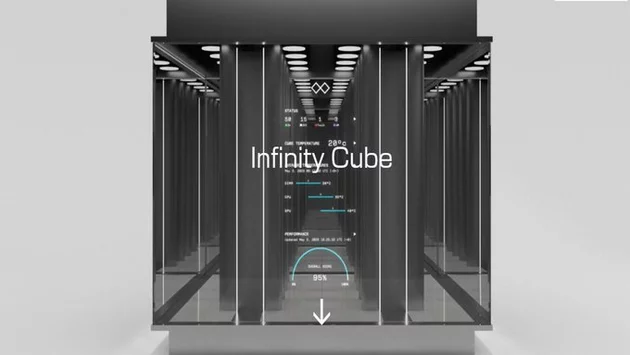

Nvidia partner Odinn is reimagining the data center with the Infinity Cube, a 14-foot AI supercluster that pairs dense compute with a glass-encased design. The concept promises to “beautify” high-performance computing while packing massive computational resources into a compact footprint. The Infinity Cube can integrate multiple Omnia supercomputers, giving data centers a new way to maximize density and efficiency without compromising on cooling or performance.

The system’s headline feature is its memory: 86TB of DDR5 ECC registered RAM. Alongside this, the Cube includes 224 B200 GPUs and 56 AMD EPYC CPUs with a combined 8,960 cores. For organizations running advanced AI workloads, this is an unusually large amount of compute in a single enclosure.
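To put those totals in perspective, the short Python sketch below derives per-CPU ratios from the quoted figures. The ratios are simple divisions and not vendor-published numbers; the helper name `per_unit` is made up for illustration, and the 86TB figure is assumed to be a rounded decimal value.

```python
# Totals as quoted in the text; everything derived below is plain arithmetic,
# not a vendor specification.
GPUS = 224          # B200 GPUs
CPUS = 56           # AMD EPYC CPUs
TOTAL_CORES = 8_960 # combined CPU cores
RAM_TB = 86         # DDR5 ECC registered RAM, assumed to be a rounded figure


def per_unit(total: float, units: int) -> float:
    """Hypothetical helper: divide a system-wide total evenly across units."""
    return total / units


cores_per_cpu = per_unit(TOTAL_CORES, CPUS)  # CPU cores per socket
gpus_per_cpu = per_unit(GPUS, CPUS)          # GPUs paired with each CPU
ram_per_cpu_tb = per_unit(RAM_TB, CPUS)      # memory per CPU, in TB

print(f"Cores per CPU: {cores_per_cpu:.0f}")
print(f"GPUs per CPU:  {gpus_per_cpu:.0f}")
print(f"RAM per CPU:   {ram_per_cpu_tb:.2f} TB")
```

On these numbers, each CPU works out to 160 cores, four GPUs, and roughly 1.5TB of memory, assuming the resources are spread evenly across sockets.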

High-performance computing often struggles with heat management, and Odinn’s Infinity Cube tackles this head-on with a liquid-cooled design. By keeping all 224 GPUs and 56 CPUs within optimal thermal ranges, the Cube is meant to deliver consistent performance for even the most demanding AI training tasks. CES 2026 attendees were shown how this cooling system allows for dense compute setups without the usual risk of overheating or throttling, signaling a potential shift in how AI clusters are deployed.

Beyond processing power, the Infinity Cube also impresses with storage capacity. The system includes 27.5 petabytes of NVMe storage, enabling rapid access to vast datasets needed for AI and machine learning workflows. By consolidating storage, memory, and compute into a single visual structure, the Cube offers a streamlined approach to enterprise AI infrastructure that could dramatically reduce footprint and complexity.
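As a rough sense of scale, the sketch below relates the quoted storage capacity to the GPU count and system memory. It assumes decimal units (1 PB = 1,000 TB) and an even split across GPUs, neither of which the source specifies; the variable names are illustrative only.

```python
# Figures quoted in the article; decimal units are an assumption.
STORAGE_PB = 27.5   # NVMe storage
RAM_TB = 86         # DDR5 system memory
GPUS = 224          # B200 GPUs

TB_PER_PB = 1_000   # assumed decimal conversion, not stated in the source

storage_tb = STORAGE_PB * TB_PER_PB
storage_per_gpu_tb = storage_tb / GPUS    # NVMe capacity per GPU if split evenly
storage_to_ram_ratio = storage_tb / RAM_TB  # how many times storage exceeds RAM

print(f"Total storage:   {storage_tb:,.0f} TB")
print(f"Storage per GPU: {storage_per_gpu_tb:.1f} TB")
print(f"Storage-to-RAM:  {storage_to_ram_ratio:.0f}x")
```

Under those assumptions, the Cube holds a little over 120TB of NVMe storage per GPU, and about 320 times as much storage as system memory.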

The Infinity Cube builds on Odinn’s Omnia supercomputers, which were previously introduced as movable AI systems. By combining multiple Omnia units in one enclosure, the Infinity Cube avoids the throughput limitations that a single system would face. This modular approach allows organizations to scale their AI clusters more efficiently while maintaining high compute density.

Odinn’s approach isn’t just about raw power; it’s also about aesthetics. The glass-enclosed Infinity Cube showcases the internal components, making the typically hidden world of high-performance computing visually striking. This design could make AI infrastructure a centerpiece rather than a backroom necessity, appealing to forward-looking tech companies and modern data centers.

For industries relying on AI, machine learning, and large-scale simulations, the Infinity Cube represents a significant step forward. Its combination of massive DDR5 memory, thousands of CPU cores, hundreds of GPUs, and petabytes of storage in a liquid-cooled, modular system could redefine expectations for data center density and performance. As AI workloads grow more complex, solutions like the Infinity Cube may become essential for competitive organizations.
