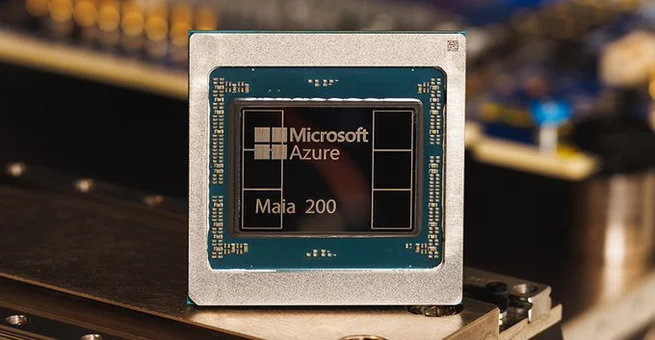

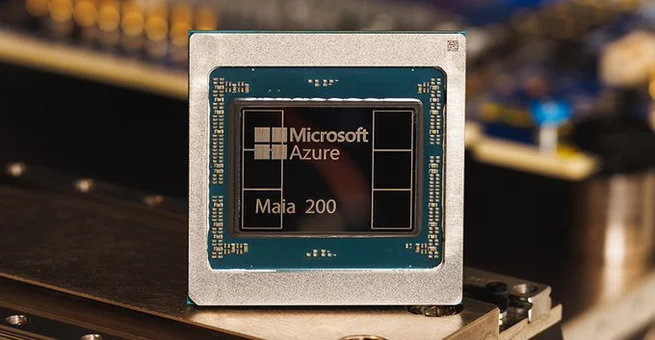

Microsoft’s Maia 200 AI chip is officially rolling out to data centers, answering a question many have been asking: how serious is Microsoft about building its own AI hardware to compete at the highest level? The short answer: very. The new chip is designed to run massive AI models more efficiently, cut long-term infrastructure costs, and give Microsoft tighter control over how advanced AI services are delivered. Early details highlight major performance gains, improved efficiency, and a clear signal that Microsoft wants to lead, not follow, in AI acceleration.

The Maia 200 AI chip is Microsoft’s second-generation in-house AI accelerator, built using an advanced 3nm manufacturing process. Each chip packs more than 100 billion transistors, all optimized for large-scale AI workloads such as training and inference for modern foundation models. This level of density allows the chip to handle today’s most demanding models while leaving room for future growth.

Unlike general-purpose processors, Maia 200 is purpose-built for AI tasks. That focus matters because AI workloads behave differently from traditional computing jobs. By tailoring the chip specifically for AI, Microsoft can squeeze out more performance per watt and reduce operational costs across its cloud infrastructure.

Microsoft is being unusually direct with performance comparisons this time around. According to the company, Maia 200 delivers roughly three times the FP4 performance of Amazon’s third-generation Trainium chip and exceeds the FP8 performance of Google’s seventh-generation TPU. These claims position Maia 200 as a serious contender in the AI chip race.

This shift in tone is notable. When Microsoft launched its first Maia chip in 2023, it avoided head-to-head comparisons. Now, the company appears confident enough to put numbers on the table. While real-world benchmarks will ultimately matter most, the messaging alone signals a more aggressive strategy.

One of the key selling points of the Microsoft Maia 200 AI chip is its ability to run extremely large models without compromise. Microsoft says the chip can comfortably support today’s largest production models while maintaining headroom for even more complex systems in the future.

That headroom is crucial. AI models continue to grow in size and capability, often pushing existing hardware to its limits. By designing Maia 200 with future models in mind, Microsoft is trying to avoid frequent hardware redesigns and ensure smoother scaling as AI demands increase.

Microsoft plans to use Maia 200 to host advanced AI models, including OpenAI’s GPT-5.2, across internal platforms such as Microsoft Foundry and Microsoft 365 Copilot. This tight integration between custom silicon and software services gives Microsoft a strategic advantage.

By controlling both the hardware and the software stack, Microsoft can optimize performance, latency, and cost in ways that are difficult to achieve with off-the-shelf chips alone. For enterprise customers, this could translate into faster AI responses, more reliable services, and potentially lower prices over time.

Beyond raw speed, Microsoft is emphasizing efficiency. The company says Maia 200 delivers around 30 percent better performance per dollar compared to the latest hardware currently deployed in its data centers. That metric is especially important for large-scale AI inference, where costs can quickly spiral.
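
To see what a performance-per-dollar gain means for a bill, here is a rough back-of-the-envelope sketch. It assumes the 30 percent figure applies uniformly to a fixed workload, and the baseline spend is a made-up number for illustration only, not anything Microsoft has published. The point it shows is that 30 percent more work per dollar works out to roughly 23 percent lower cost for the same work, not 30 percent.

```python
def cost_for_same_work(baseline_cost: float, perf_per_dollar_gain: float) -> float:
    """Cost of running the same workload after a performance-per-dollar gain.

    A gain of 0.30 means each dollar buys 1.30x as much work,
    so the same work costs baseline / 1.30.
    """
    return baseline_cost / (1.0 + perf_per_dollar_gain)


# Hypothetical baseline inference spend (illustrative, not a published figure).
baseline = 1_000_000.0

new_cost = cost_for_same_work(baseline, 0.30)
savings_fraction = 1.0 - new_cost / baseline

print(f"New cost for the same work: ${new_cost:,.0f}")  # $769,231
print(f"Effective savings: {savings_fraction:.1%}")     # 23.1%
```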

Efficiency improvements also matter for sustainability. Data centers consume enormous amounts of power, and AI workloads are only increasing that demand. A more efficient chip helps reduce energy usage per task, aligning with broader efforts to make large-scale computing more sustainable.

The launch of the Maia 200 AI chip marks a shift in how Microsoft positions itself against other cloud giants. Instead of quietly building internal tools, Microsoft is now openly showcasing its capabilities and challenging competitors on performance.

This change suggests growing confidence in its silicon team and long-term roadmap. While competitors are also working on next-generation AI chips, Microsoft’s willingness to compare generations directly hints that it believes Maia 200 can stand its ground today, not just in the future.

Microsoft isn’t keeping Maia 200 locked behind closed doors. The company is offering early access to its Maia 200 software development kit for academics, developers, AI labs, and open-source contributors. This move could help build an ecosystem around the chip and accelerate optimization across different AI workloads.

Inviting external experts also strengthens Microsoft’s credibility. Broader testing and feedback can surface real-world strengths and weaknesses faster than internal testing alone, ultimately leading to a more mature platform.

The Microsoft Maia 200 AI chip is more than a hardware upgrade. It represents a strategic commitment to owning critical pieces of the AI stack, from silicon to services. As AI becomes central to productivity tools, enterprise software, and cloud platforms, that control could prove decisive.

If Maia 200 performs as promised at scale, Microsoft will be better positioned to deliver advanced AI features reliably and affordably. For customers, that could mean smarter tools, faster innovation, and fewer limits on what AI can do next.
