AI Therapy and Privacy Risks: What You Need to Know

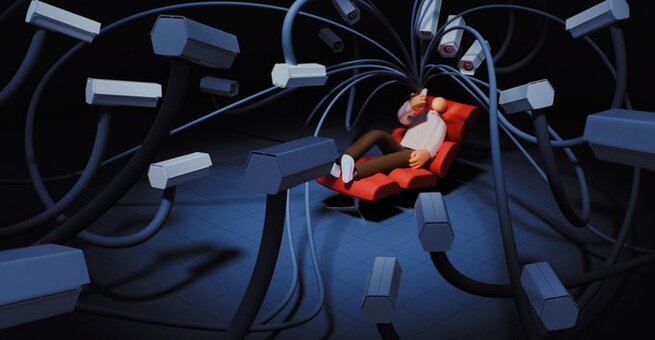

Wondering if AI therapy is safe for your mental health journey? You’re not alone. As virtual therapy apps and mental health chatbots powered by AI gain popularity, many users are asking: Can I trust AI therapy with my private thoughts? The short answer: it's complicated. While AI therapy tools promise convenience, affordability, and 24/7 support, there's growing concern about how these systems handle deeply personal data, especially in a political environment where surveillance and data misuse are escalating.

AI therapy has surged in popularity through platforms like Meta AI, ChatGPT, and xAI's Grok, with Big Tech marketing these tools as accessible mental health support for those who lack access to traditional therapy. Meta CEO Mark Zuckerberg recently declared, “Everyone will have an AI,” framing it as a personal companion and therapist rolled into one. But behind this helpful façade lies a complex and potentially dangerous ecosystem, where your mental health data could be less private than you think.

While AI therapy apps promise confidentiality, their creators often collaborate with or support governments known for aggressive data collection. In the U.S., this overlap is particularly worrying. On one hand, Big Tech urges users to open up to AI about topics as sensitive as depression, anxiety, gender identity, and trauma. On the other, U.S. agencies are working to build surveillance mechanisms that target exactly this kind of intimate data.

Recent policy shifts have revealed troubling intent. Government bodies like the Department of Government Efficiency (DOGE) are attempting to centralize citizen data across platforms and agencies. Health Secretary Robert F. Kennedy Jr. has pushed for controversial tracking programs involving autism and mental health, hinting at institutional bias and a disregard for civil liberties. The use of data collected through AI tools for surveillance is no longer science fiction; it’s an imminent threat.

For individuals seeking support from AI therapy tools, this convergence of tech and government raises serious privacy concerns. These AI platforms often ask users to disclose sensitive issues like sexual orientation, trauma history, substance abuse, and political beliefs. Yet few users are aware of where that data ends up—or how it might be used.

While you may assume that a conversation with a therapy chatbot stays private, these systems are typically hosted on infrastructure controlled by companies with powerful political affiliations. Elon Musk’s xAI, for example, is privately held yet linked to government operations. Meanwhile, Meta and OpenAI have shown growing alignment with political powers that actively seek to regulate and monitor the behavior and thoughts of U.S. residents.

The risks of AI therapy aren't distributed equally. Marginalized communities, such as immigrants, LGBTQ+ individuals, and neurodivergent people, are particularly vulnerable. Reports show that protected speech has already been used as grounds for legal action against individuals. Legal immigrants have faced arrest and revoked residency for expressing views in public forums, while federal agencies now propose building detailed psychological profiles based on wearable devices and digital footprints.

In this landscape, AI therapy becomes a potential tool for state surveillance rather than healing. The data you share could be weaponized in ways that compromise your safety, restrict your rights, or invite targeted harassment.

What makes this issue more pressing is the quiet but visible cooperation between Big Tech and political power players. These tech giants aren't developing AI therapy tools for purely benevolent reasons: they’re also strategically avoiding regulation, pursuing federal contracts, and aligning their policies with those in power.

Sam Altman, CEO of OpenAI, has advocated for deregulating energy development for AI, a policy conveniently aligned with certain government interests. Google, while more reserved, continues to court political favor through quiet lobbying and partnerships. Meta, meanwhile, is doubling down on AI expansion while subtly aligning its policies with government surveillance efforts, ensuring it stays in regulators' good books.

If you’re still interested in using AI therapy apps, it's crucial to choose platforms that emphasize encryption, transparency, and independence from major tech firms. Look for tools that are open-source, peer-reviewed, and clear about their data-sharing policies. Use encrypted messaging apps where possible, and avoid linking therapy accounts to your social media or primary email.

Additionally, consider hybrid models where licensed therapists supervise AI interactions. These services often have more stringent privacy policies and are held to professional ethical standards.

AI mental health tools should offer a safe, judgment-free space for healing. Unfortunately, they currently operate in a gray area where convenience, privacy, and politics collide. Until clear regulations are in place and companies adopt transparent, user-first policies, trusting an AI with your deepest thoughts is a high-stakes gamble.

Whether you're exploring AI therapy for cost savings, 24/7 availability, or personal comfort, it's critical to ask the hard questions about who sees your data, who owns it, and how it could be used against you. Your mental health deserves support—but not at the cost of your privacy and freedom.
