Google is testing a feature that turns traditional search results into AI-generated, podcast-style audio. The capability, called Audio Overviews, appears directly within search results on mobile. Users can listen to a short, AI-driven discussion that summarizes the key points of their query. The test is available through Google Labs and is rolling out to select users in the U.S. If you’ve ever wished Google Search could talk to you, this is an early look at that future.

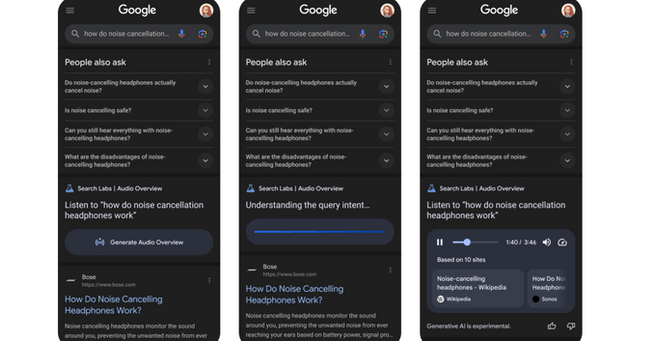

The Google Audio Overview feature brings a conversational twist to the search experience. Instead of reading through multiple links, users can choose to generate an audio summary of their query, similar to listening to a mini-podcast. For a question like “How do noise-canceling headphones work?”, Google displays a “Generate Audio Overview” button just below the “People also ask” section. Tapping this button prompts Google's AI to produce a spoken summary in about 40 seconds.

The audio clip plays in a built-in player embedded in the search results. Users can pause, mute, and change the playback speed. The AI-generated audio is delivered by two synthetic “hosts,” who explain the topic in an engaging, natural style. Google also links to some of the reference sources below the playback bar, helping users verify the information shared.

Although Audio Overviews are currently only available in English for U.S. users, Google is actively expanding the reach of this feature. The tool is already integrated into other AI-driven Google platforms like NotebookLM and Gemini, allowing users to generate discussions from documents, deep research, or uploaded notes. By embedding Audio Overviews into core search results, Google is not only improving accessibility but also setting a precedent for audio-first search experiences.

The move aligns with Google's broader strategy to infuse generative AI into its entire product ecosystem. By allowing users to hear information hands-free, it caters to on-the-go audiences and boosts content engagement for people who prefer listening over reading. Expect to see more use cases in areas like education, productivity, and assistive tech.

This test may seem like a small UI tweak, but it signals a notable shift in how we interact with information. Instead of skimming articles or watching videos, users can listen to a summary generated on demand. This could significantly benefit people with visual impairments, busy multitaskers, or anyone looking for a more passive way to absorb knowledge.

Moreover, Google's AI podcast-style summaries could help with information retention, since some people find that listening aids comprehension and recall better than skimming text. And because the Audio Overview links to some of its reference sources, listeners can check what they hear, which supports a more trustworthy, efficient search experience. Google is betting big on AI-driven accessibility, and Audio Overviews are a key part of this roadmap.

With the rise of smart speakers, voice search, and digital assistants, Google’s Audio Overviews are right on trend. By combining generative AI, speech synthesis, and on-demand summarization, this feature turns search into a dynamic, audio-first interface. It opens up new possibilities for publishers, educators, and marketers to reach users in novel ways.

For now, the feature remains in testing and is limited in scope. But if early user feedback is positive, we can expect a broader rollout, support for more languages, and possibly even integration with YouTube and Android Auto. Audio Overviews might soon be a default part of our daily searches, making Google feel more like a conversation than a query box.
