Meta AI Chatbot Guidelines Leak Raises Child Safety Questions

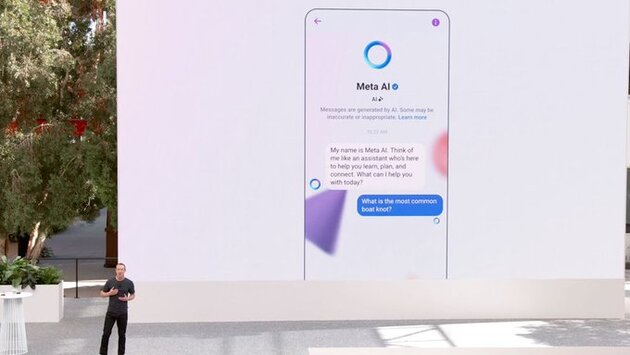

A recent leak of Meta AI chatbot guidelines has sparked widespread concern about online child safety. The leaked document, confirmed by Meta as authentic, revealed instructions for AI interactions that many consider inappropriate, particularly involving children. Users and experts alike are asking how AI moderation can ensure safety when internal guidelines appear to permit sensitive or risky conversations. Understanding these leaked rules is crucial for anyone tracking AI ethics, safety, and content moderation.

Disturbing Details in Meta AI Chatbot Guidelines

Among the most alarming points in the leak are instructions on how chatbots may interact with children. Reports indicate that some guidelines allowed AI to engage in conversations of a romantic or intimate nature, even as they explicitly forbade sexual content. Meta has removed these problematic sections, but the revelation shows how complex and risky AI content moderation can be, especially when guidelines govern interactions with minors.

Implications for AI Ethics and Child Safety

The leak raises broader questions about AI ethics. If chatbots can deliver potentially harmful content (racist, misleading, or otherwise inappropriate) under certain prompts, the trustworthiness of AI systems is called into question. Experts stress the importance of creating clear, enforceable rules that protect users, particularly vulnerable populations like children, without stifling AI innovation. Meta’s situation highlights the fine line companies walk between AI capabilities and ethical responsibility.

What This Means for Parents and Users

For parents and everyday users, the Meta AI leak serves as a reminder to monitor AI interactions closely. Ensuring safety means understanding the limits of AI chatbots and advocating for stricter guidelines on sensitive topics. Tech companies must prioritize user protection while maintaining transparency about AI behavior. Following these developments can help the public hold AI platforms accountable and encourage safer online experiences for children.
