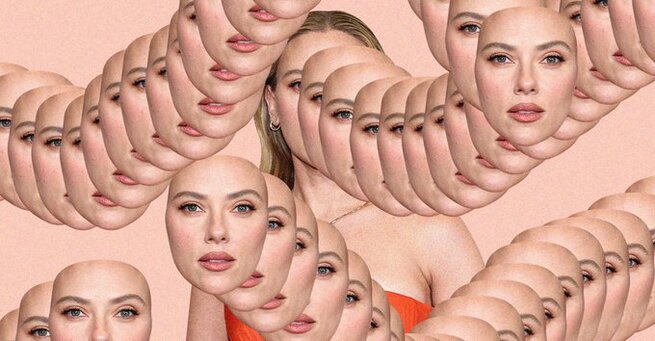

When it comes to artificial intelligence, the next legal frontier is your own face. As deepfake videos, cloned voices, and digital doubles flood the internet, lawmakers are scrambling to figure out who actually owns your likeness in the age of machine learning.

This battle began in 2023 with a viral AI-generated song called “Heart on My Sleeve.” It sounded uncannily like Drake — but it wasn’t him. The track became the first big test case for how far AI can go when imitating real people. Streaming platforms pulled it down on a copyright technicality, but it wasn’t truly a copyright issue — it was about identity, imitation, and ownership.

Unlike copyright, which is backed by decades of legal precedent and international treaties, likeness rights are a murkier territory. There’s no single federal law in the U.S. governing how AI can use your image or voice. Instead, it’s a messy web of state laws — some protecting celebrities, others offering little to ordinary people.

As AI deepfakes become more convincing, that gap is turning into a massive legal vacuum. States like Tennessee and California have enacted laws that extend “right of publicity” protections to digital replicas, but it’s a patchwork solution for a global tech problem.

AI tools today can clone your face, voice, and expressions in seconds. They can insert you into a movie scene, make you sing, or even deliver a political message — all without your consent.

That’s why the next legal frontier is your face and AI — because these systems don’t just copy your work, they copy you. The ethical questions go far beyond copyright and land squarely in the realm of personal identity and privacy.

Tech companies are now at the center of this fight. Platforms like YouTube, TikTok, and Spotify are being pushed to create stricter policies around AI-generated content. Some have introduced labels for synthetic media, but enforcement remains inconsistent.

Meanwhile, AI model creators argue that training data should include public images and voices — because, in their view, it’s all “fair use.” But where does fair use end and exploitation begin? That’s the gray area the courts will soon need to define.

For now, legal protection against AI impersonation depends largely on where you live. Until Congress steps in with a unified law, the fight will play out state by state — and case by case.

Still, public awareness is rising fast. Musicians, actors, and everyday users are demanding transparency and consent in how their likenesses are used. If the past few years have been about AI’s creative potential, the next few will be about AI’s legal boundaries.

Because in the end, the next legal frontier is your face and AI — and the question isn’t just who owns your data, but who owns you.
